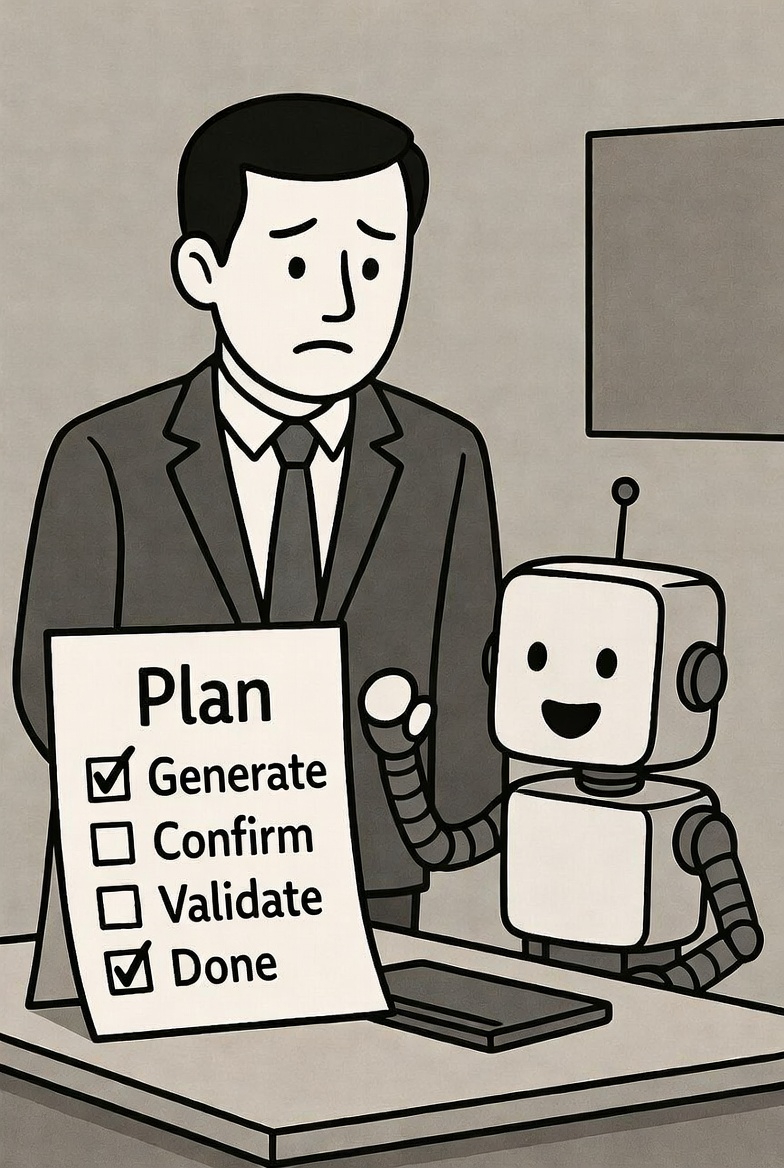

I’ve been building a rule-heavy review agent for public-API governance changes. Early phases (discovery, candidate generation) work reliably. Later phases—especially rigorous validation against the full rule set—frequently get ignored, skimmed, or half-done, even with explicit, repeated instructions to treat them as mandatory.

This looks like a familiar failure mode: the model excels at creative, open-ended early work but treats final checks as low-priority cleanup. Ramping up prompt emphasis (“this is critical”, “double-check every finding”) helps marginally but never reaches consistent 100% adherence across runs.

What finally broke the pattern:

- Phased execution with progressive instruction reveal

  Split the workflow into isolated stages, using chained calls (or tool gates) to deliver fresh context and hidden plans only when needed:

  - Phase 1: Read artifacts + rules → generate candidates → append to file.
  - Phase 2: A separate call reveals a “deep audit” plan (source-level validation of findings). The agent must then request the next plan.
  - Phase 3: A final call reveals a “publish-ready” plan that re-reads the rules and enforces per-finding checks before formatting the output.
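A minimal Python sketch of the progressive-reveal gate, assuming the hidden plans live on the orchestrator side and that `call_model` wraps whatever LLM client you use (stubbed here). `PhaseGate`, `request_next_plan`, `complete`, and `run_phases` are hypothetical names for illustration, not part of any library. The gate refuses to reveal a later plan until the current phase has reported its output, and each phase runs as a separate call that sees only its own plan plus the shared findings file. Here the loop calls `request_next_plan` on the agent's behalf; in a tool-gate setup the agent would call it as a tool.

```python
from typing import Callable, Optional


class PhaseGate:
    """Reveals hidden phase plans one at a time (hypothetical helper, not a library API)."""

    def __init__(self, plans: list[tuple[str, str]]) -> None:
        self._plans = list(plans)          # (phase_name, plan_text) in execution order
        self._next = 0                     # index of the next plan to reveal
        self._open: Optional[str] = None   # revealed phase that has not completed yet
        self.outputs: dict[str, str] = {}  # phase_name -> recorded output

    def request_next_plan(self) -> Optional[tuple[str, str]]:
        # Refuse to reveal a later plan while the current phase is unfinished.
        if self._open is not None:
            raise RuntimeError(f"phase {self._open!r} has not been completed")
        if self._next == len(self._plans):
            return None                    # every phase has run
        name, text = self._plans[self._next]
        self._next += 1
        self._open = name
        return name, text

    def complete(self, name: str, output: str) -> None:
        # Record the output of the open phase and unlock the next plan.
        if name != self._open:
            raise RuntimeError(f"{name!r} is not the open phase")
        self.outputs[name] = output
        self._open = None


def run_phases(gate: PhaseGate, call_model: Callable[[str, str], str]) -> dict[str, str]:
    """Run each phase as its own model call so it starts from fresh context."""
    findings_file = ""                     # stands in for the findings file on disk
    while (step := gate.request_next_plan()) is not None:
        name, plan = step
        # The call sees only the current plan plus the findings file:
        # no earlier conversation and no plans that are still hidden.
        output = call_model(plan, findings_file)
        findings_file += f"\n## {name}\n{output}"
        gate.complete(name, output)
    return gate.outputs


if __name__ == "__main__":
    plans = [
        ("candidates", "Read artifacts and rules; append candidate findings to the file."),
        ("deep_audit", "Validate every finding against the source."),
        ("final_publish", "Re-read the rules, check each finding, then format output."),
    ]
    # Stub standing in for a real LLM call.
    fake_model = lambda plan, findings: f"done ({len(findings)} chars of prior findings)"
    for name, output in run_phases(PhaseGate(plans), fake_model).items():
        print(name, "->", output)
```

In this sketch the later plans are never placed in the prompt at all, rather than being marked "for later", so the model cannot skim or pre-empt a step it has not been shown yet.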

- Semantic framing: make validation sound non-negotiable

  Even with phasing, full compliance only arrived after renaming the tail steps:

  - “validate” → deep_audit
  - “verify” → final_publish
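One way to apply the renaming in code, assuming the phase gates are exposed to the agent as tools: both the step list and the tool names are rewritten, so the model only ever sees the high-gravity names. Only the two renames in `STEP_RENAMES` come from the list above; `rename_steps`, `gate_tool`, and the description wording are illustrative assumptions, and the tool dictionary is a generic shape rather than any particular vendor's schema.

```python
# Only the two renames below come from the post; everything else is illustrative.
STEP_RENAMES = {"validate": "deep_audit", "verify": "final_publish"}

# Hypothetical descriptions that frame each tail step as mandatory sign-off.
GATE_DESCRIPTIONS = {
    "deep_audit": "Required: returns the source-level audit plan for every finding.",
    "final_publish": "Required sign-off: returns the plan that re-checks each finding "
                     "against the full rule set before any output is formatted.",
}


def rename_steps(steps: list[str]) -> list[str]:
    """Replace soft step names with high-gravity ones; keep all others as-is."""
    return [STEP_RENAMES.get(step, step) for step in steps]


def gate_tool(name: str) -> dict:
    # Generic tool shape: a name, a description, and an empty JSON Schema
    # for parameters. Adapt it to the schema your agent framework expects.
    return {
        "name": name,
        "description": GATE_DESCRIPTIONS[name],
        "parameters": {"type": "object", "properties": {}},
    }


steps = rename_steps(["generate_candidates", "validate", "verify"])
tools = [gate_tool(step) for step in steps if step in GATE_DESCRIPTIONS]
print([tool["name"] for tool in tools])  # ['deep_audit', 'final_publish']
```

Putting the framing in the tool description as well as the name keeps the "mandatory sign-off" signal in front of the model at the moment it decides whether to call the gate.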

Result: 95%+ automation across the 10–30 reviews I run daily, with the core drift problem largely solved. I'm still refining rules and thresholds, but the late-phase fatigue is gone.

Reflections

“Plan fatigue” is my shorthand for observed patterns like long-horizon unreliability, late-step skipping, or context-driven quality drift in agent chains. If you’re seeing similar issues in validation-heavy or multi-step agents, try progressive instruction reveal + high-gravity naming for the tail steps.

Human parallel: when I’m mentally tired, I skip tedious verification too. A short break for fresh context and reframing the task as “final sign-off” helps me push through—just like it helps the LLM.

There’s a natural tension here: we value LLMs for their flexibility and emergent reasoning, yet when we prescribe every step rigidly, they deviate—just as humans push back against micromanagement. My phased reveal + semantic reframing gives the model fresh context and a sense of “ownership” over the tail steps without fully delegating planning. For strict rule enforcement, the imposed structure (delivered progressively) has proven more reliable in my runs than looser approaches.

Curious if others have hit this and what mitigations worked (phasing, reflection loops, different models, self-generated sub-plans, etc.). Happy to discuss in comments.
