I’ve been tuning a prompt for weeks. The rules are clearly stated. The large language model (LLM) keeps misapplying them: cheerfully, confidently, never quite right.

The tell: the output turns logically incoherent where it’s usually smoothly joined. More explanation doesn’t help. The LLM never says “this is new to me”; it just flails, misunderstanding every time and turning out poorly executed work in this one area.

The workaround that actually helped: Generate first, then reload the rules and verify the proposed output against them. This converts a recall problem (hard) into a recognition problem (easier).

The verification pass gives the novel rules focused attention, free of generation noise. It catches misapplied rules: not total oversights, but the subtle errors where the LLM tried and got it wrong.
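A minimal Python sketch of the generate-then-verify loop, assuming a hypothetical `llm` callable that takes a prompt string and returns the model’s reply as text. Checking one rule per call and the PASS/FAIL answer format are assumptions for illustration, not part of the workflow as described; `fake_llm` is a stand-in so the sketch runs without a real model.

```python
from typing import Callable, List, Tuple

# Hypothetical interface: any function mapping a prompt string to the model's reply.
LLM = Callable[[str], str]


def generate_then_verify(task: str, rules: List[str], llm: LLM) -> dict:
    # Pass 1 (generation): the model must recall and apply every rule while producing output.
    rules_text = "\n".join(f"{i}. {rule}" for i, rule in enumerate(rules, 1))
    draft = llm(f"Rules:\n{rules_text}\n\nTask:\n{task}")

    # Pass 2 (verification): reload the rules and check the finished draft against them.
    # Assumption: one rule per call, so each rule gets focused attention on its own.
    findings: List[Tuple[str, str]] = []
    for i, rule in enumerate(rules, 1):
        verdict = llm(
            f"Rule {i}: {rule}\n\nProposed output:\n{draft}\n\n"
            "Does the proposed output follow this rule? Answer PASS or FAIL, then explain."
        )
        findings.append((rule, verdict))

    # Collect the rules the verifier flagged (assumes replies start with PASS or FAIL).
    failed = [rule for rule, verdict in findings if verdict.strip().upper().startswith("FAIL")]
    return {"draft": draft, "findings": findings, "failed": failed}


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in model: drafts a list without a serial comma,
    # then recognizes that mistake when asked about the serial-comma rule.
    if prompt.startswith("Rules:"):
        return "apples, pears and plums"
    if "Oxford comma" in prompt and "pears, and" not in prompt:
        return "FAIL: the list is missing the serial comma."
    return "PASS"


if __name__ == "__main__":
    result = generate_then_verify(
        "List three fruits.",
        ["Use the Oxford comma in lists.", "Use lowercase only."],
        fake_llm,
    )
    print(result["failed"])
```

The draft is never regenerated here; the sketch only reports which rules failed, leaving the fix (a retry, or doing it yourself) as a separate decision.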

This works well for simple validation. For code generation, there’s no good equivalent: you do the novel parts yourself.

Why this happens: “Novel” to an LLM isn’t what humans expect. It’s not just “off the map”—it includes edges of familiar patterns, intersections of common rules that weren’t combined in training, “obvious remixes” that aren’t obvious to the model.

Here’s the key: Novelty impairs processing, turning the LLM into a novice. Same Latin root (novus).

When an LLM hits novel territory, it is a novice in that area—permanently. It can’t learn its way out in-session, just like a human beginner can’t become an expert in 5 minutes.

My theory: LLMs are trained on correct outputs, not the messy process of learning. They never flailed, failed, and integrated feedback. That’s what builds the meta-scaffolding humans use to navigate unfamiliar territory. LLMs skipped it.

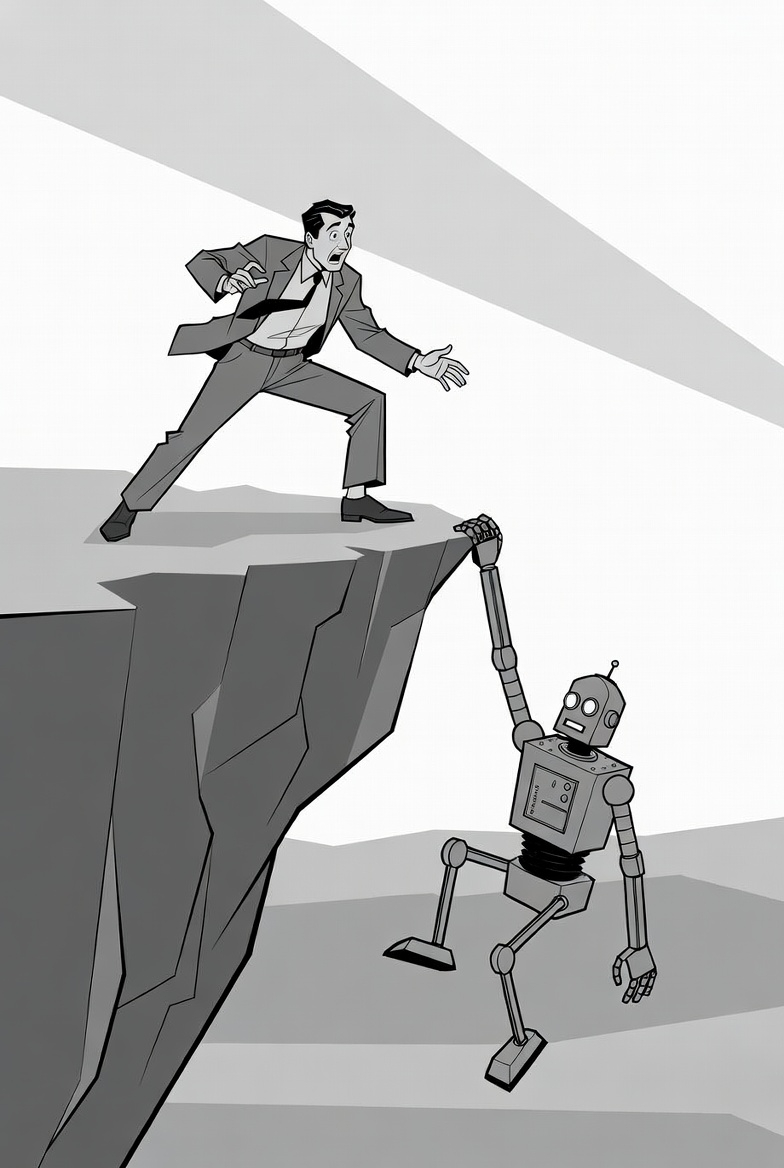

Result: amazing on the map, totally lost off it. No self-awareness that they’re lost.

The hard truth: Stock LLMs are permanent novices in sparse-corpus domains, and which domains are sparse is hard to predict.

With novice-appropriate scaffolding they can still assist, but they won’t reach expert reliability without deep training of the model itself, at which point it’s no longer the stock model.

When the LLM “just can’t understand,” you either live with a novice or do it yourself.
